Loan Default Risk Assessment Using Machine Learning

The main objective of this project is to compare two popular supervised machine learning techniques: classification with logistic regression and classification with random forests. We use a dataset of auto loans with features associated with the loan application process. Both models are evaluated and compared using accuracy, precision, recall, F1 score, and AUC. Before modeling, the data was cleaned and feature engineering was carried out.

Linear Classifier using Logistic Regression

Load Data and Import Libraries

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns

from sklearn.linear_model import LogisticRegression

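from sklearn.model_selection import train_test_split

from sklearn.metrics import confusion_matrix, precision_score, recall_score, f1_score, roc_curve, auc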

loan_df = pd.read_csv('../data/vehicle_loans_feat.csv', index_col='UNIQUEID')

Train/Test Split

The first step in building a machine learning model is to prepare the data. Here, categorical columns are converted to the 'category' data type to ensure they are handled correctly during modeling. A subset of relevant columns is selected for simplicity. The data is then split into training and test sets using train_test_split, ensuring that the model can be evaluated on unseen data, which is crucial for assessing its generalizability.

category_cols = ['MANUFACTURER_ID', 'STATE_ID', 'DISBURSED_CAT', 'PERFORM_CNS_SCORE_DESCRIPTION', 'EMPLOYMENT_TYPE']

loan_df[category_cols] = loan_df[category_cols].astype('category')

small_cols = ['STATE_ID', 'LTV', 'DISBURSED_CAT', 'PERFORM_CNS_SCORE', 'DISBURSAL_MONTH', 'LOAN_DEFAULT']

loan_df_sml = loan_df[small_cols]

x = loan_df_sml.drop(['LOAN_DEFAULT'], axis=1)

y = loan_df_sml['LOAN_DEFAULT']

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)
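As a minimal illustration of what the split guarantees, the sketch below applies the same `train_test_split` call to a small synthetic table. Only the column names mirror this dataset. The 100 rows and their values are made up. It checks that `test_size=0.2` holds out 20% of the rows and that no row lands in both sets.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Synthetic stand-in for loan_df_sml: 100 hypothetical loans with made-up values
rng = np.random.default_rng(0)
toy_df = pd.DataFrame({
    "LTV": rng.uniform(40, 95, size=100),
    "PERFORM_CNS_SCORE": rng.integers(0, 900, size=100),
    "LOAN_DEFAULT": rng.integers(0, 2, size=100),
})

x_toy = toy_df.drop(["LOAN_DEFAULT"], axis=1)
y_toy = toy_df["LOAN_DEFAULT"]

# Same call pattern as above: 80% train, 20% test, fixed seed for reproducibility
x_tr, x_te, y_tr, y_te = train_test_split(x_toy, y_toy, test_size=0.2, random_state=42)

print(len(x_tr), len(x_te))  # 80 20
# The two index sets are disjoint, so test rows are never seen during training
print(set(x_tr.index).isdisjoint(x_te.index))  # True
```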

Variable Encoding

Many machine learning algorithms, including logistic regression, require numerical input, so categorical variables must be encoded as numbers. pd.get_dummies performs one-hot encoding, converting each categorical variable into a set of binary columns. With drop_first=True, one level per variable is dropped, because it is implied when all the other columns for that variable are 0. The encoded data is then split again, this time with test_size=0.3, which replaces the split from the previous step.

loan_data_dumm = pd.get_dummies(loan_df_sml, prefix_sep='_', drop_first=True)

x = loan_data_dumm.drop(['LOAN_DEFAULT'], axis=1)

y = loan_data_dumm['LOAN_DEFAULT']

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=42)
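To make the effect of `drop_first=True` concrete, here is a toy example. The four rows and the category labels "low", "mid", and "high" are made up, and only the column names come from this dataset. Categories are ordered alphabetically, so "high" becomes the dropped reference level: a "high" row is represented by zeros in both remaining dummy columns.

```python
import pandas as pd

# Hypothetical rows; only the column names come from the loan dataset
toy = pd.DataFrame({
    "LTV": [80.5, 65.0, 90.1, 72.3],
    "DISBURSED_CAT": ["low", "mid", "high", "low"],
})
toy["DISBURSED_CAT"] = toy["DISBURSED_CAT"].astype("category")

# Categories are sorted as ['high', 'low', 'mid']; drop_first=True drops 'high'
encoded = pd.get_dummies(toy, prefix_sep="_", drop_first=True)
print(list(encoded.columns))
# ['LTV', 'DISBURSED_CAT_low', 'DISBURSED_CAT_mid']
```

Numeric columns such as LTV pass through unchanged, and the dummy columns are appended after them.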

Train and Validate

The logistic regression model is instantiated and trained on the training data. The max_iter parameter is raised from its default of 100 to 200 to give the solver more iterations to converge. After training, the model makes predictions on the test set, and its accuracy is calculated as a basic measure of performance. Accuracy is useful, but it can be misleading when one class dominates, so other performance metrics are needed for a comprehensive evaluation.

logistic_model = LogisticRegression(max_iter=200)

logistic_model.fit(x_train, y_train)

preds = logistic_model.predict(x_test)

accuracy = logistic_model.score(x_test, y_test)
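To see why accuracy alone can mislead, consider a hypothetical test set with 78 non-defaults and 22 defaults, and a "model" that never predicts a default. The class balance is an assumption chosen for illustration, not a figure from this dataset. This model still reaches 78% accuracy while catching no defaults at all.

```python
# Hypothetical labels: 78 non-defaults (0) and 22 defaults (1)
y_true = [0] * 78 + [1] * 22

# A trivial "model" that always predicts non-default
y_pred = [0] * len(y_true)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
true_positives = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
recall = true_positives / sum(y_true)  # share of actual defaults that were caught

print(f"Accuracy: {accuracy:.2f}")  # Accuracy: 0.78
print(f"Recall: {recall:.2f}")      # Recall: 0.00
```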

Evaluation Metrics

Next, I computed several evaluation metrics: the confusion matrix, precision, recall, and F1 score. The confusion matrix breaks predictions down into true positives, true negatives, false positives, and false negatives. Precision measures the accuracy of positive predictions, that is, the share of predicted defaults that are actual defaults. Recall measures the model's ability to identify actual positives, that is, the share of actual defaults that the model catches. The F1 score is the harmonic mean of precision and recall. Together, these metrics give a fuller view of the model's performance, highlighting its strengths and weaknesses.

preds = logistic_model.predict(x_test)

conf_mat = confusion_matrix(y_test, preds)

tn, fp, fn, tp = conf_mat.ravel()

print("True Negatives (Correctly Predicted Non-Defaults): ", tn)

print("False Positives (Non-Defaults Predicted as Defaults): ", fp)

print("False Negatives (Defaults Predicted as Non-Defaults): ", fn)

print("True Positives (Correctly Predicted Defaults): ", tp)

precision = precision_score(y_test, preds)

recall = recall_score(y_test, preds)

f1 = f1_score(y_test, preds)

print("Precision: ", precision)

print("Recall: ", recall)

print("F1 Score: ", f1)

Output

Precision: 0.75

Recall: 0.00029545006893834944

F1 Score: 0.0005906674542232723

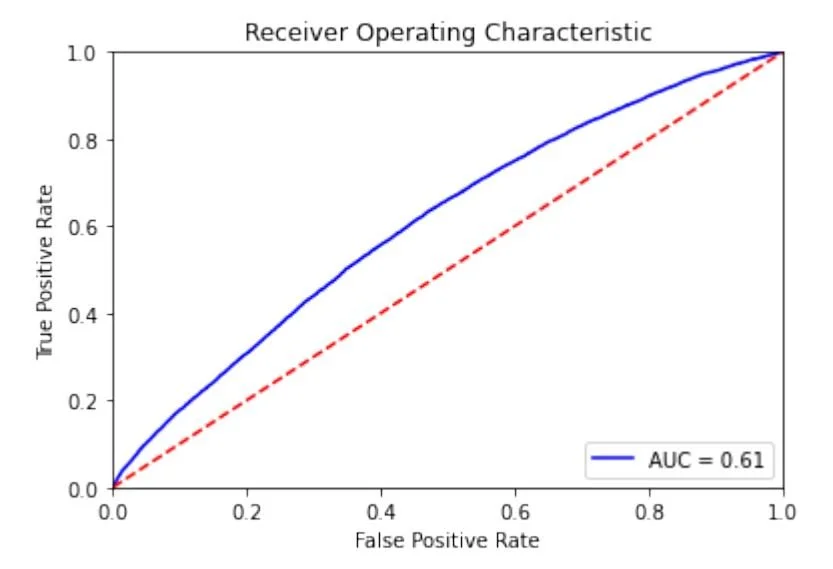
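The three formulas can be written directly in terms of confusion-matrix counts. The sketch below uses hypothetical counts for illustration. It also checks the logistic regression output above: plugging the reported precision and recall into the harmonic-mean formula reproduces the reported F1 score.

```python
def metrics_from_counts(tp, fp, fn):
    """Precision, recall and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp)  # share of predicted defaults that were real defaults
    recall = tp / (tp + fn)     # share of real defaults that were caught
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1

# Hypothetical counts, chosen only for illustration
p, r, f = metrics_from_counts(tp=3, fp=1, fn=7)
print(p, r, round(f, 4))  # 0.75 0.3 0.4286

# Sanity check against the reported logistic regression precision and recall
reported_p, reported_r = 0.75, 0.00029545006893834944
print(round(2 * reported_p * reported_r / (reported_p + reported_r), 8))  # 0.00059067
```

Because the harmonic mean is dominated by the smaller value, a near-zero recall drags F1 towards zero even when precision is high.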

ROC Curve

The ROC (Receiver Operating Characteristic) curve and AUC (Area Under the Curve) are used to evaluate the model's ability to distinguish between classes. The ROC curve plots the true positive rate against the false positive rate at various classification thresholds. The AUC summarizes the curve in a single number, where 0.5 corresponds to random guessing and 1.0 to perfect separation. A higher AUC therefore indicates better discrimination between defaults and non-defaults.

probs = logistic_model.predict_proba(x_test)

fpr, tpr, threshold = roc_curve(y_test, probs[:,1], pos_label=1)

roc_auc = auc(fpr, tpr)

print("AUC: ", roc_auc)

AUC: 0.6095831706050912

def plot_roc_curve(fpr, tpr, roc_auc):
    plt.title('Receiver Operating Characteristic')
    plt.plot(fpr, tpr, 'b', label='AUC = %0.2f' % roc_auc)
    plt.legend(loc='lower right')
    plt.plot([0, 1], [0, 1], 'r--')
    plt.xlim([0, 1])
    plt.ylim([0, 1])
    plt.ylabel('True Positive Rate')
    plt.xlabel('False Positive Rate')
    plt.show()

plot_roc_curve(fpr, tpr, roc_auc)
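An equivalent way to read the AUC is as the probability that a randomly chosen default receives a higher predicted score than a randomly chosen non-default, with ties counting as half. Under this reading, the AUC of about 0.61 means the model ranks a random default above a random non-default only about 61% of the time. The pure-Python sketch below computes this pairwise definition on hypothetical scores. It is meant for intuition, not as a replacement for roc_curve and auc.

```python
def pairwise_auc(y_true, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties count as half a win. The O(n_pos * n_neg) loop is for illustration only.
    """
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Hypothetical predicted default probabilities for two defaults and two non-defaults
y_true = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.3, 0.5]
print(pairwise_auc(y_true, scores))  # 0.75
```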

Results From Logistic Regression Model

The performance of our logistic regression model reveals several key points. The model's precision is 75%, meaning that 75% of the loans it predicted to default actually defaulted. However, recall is extremely low at ~0.03%, so almost all actual defaults are missed. Because the model so rarely predicts a default, its high precision rests on very few positive predictions. The F1 score, which balances precision and recall, is approximately 0.0006, highlighting serious problems that accuracy alone would hide. The ROC curve and AUC of ~0.61 show that the model's ability to distinguish between classes is only modestly better than random guessing (AUC = 0.5). Overall, these metrics suggest that the model predicts almost every loan as a non-default and therefore struggles badly to identify defaults.
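The low recall is consistent with the default decision rule: for a binary classifier, predict() roughly corresponds to a 0.5 cutoff on predict_proba, and few loans exceed it. The ROC curve above already traces what happens across other thresholds. The sketch below makes one step of that concrete on made-up probabilities, showing how lowering the cutoff trades precision for recall. The probabilities, labels, and the 0.3 cutoff are illustrative assumptions, and predict_at_threshold is a hypothetical helper, not part of this analysis.

```python
import numpy as np

def predict_at_threshold(default_probs, threshold=0.5):
    """Label a loan as a default (1) when its predicted probability exceeds the threshold."""
    return (np.asarray(default_probs) > threshold).astype(int)

# Hypothetical probabilities, in the role of logistic_model.predict_proba(x_test)[:, 1]
probs_default = np.array([0.55, 0.35, 0.40, 0.20, 0.45, 0.10])
y_true = np.array([1, 1, 0, 0, 1, 0])

results = {}
for threshold in (0.5, 0.3):
    preds_t = predict_at_threshold(probs_default, threshold)
    tp = int(((preds_t == 1) & (y_true == 1)).sum())
    fp = int(((preds_t == 1) & (y_true == 0)).sum())
    results[threshold] = {"precision": tp / (tp + fp), "recall": tp / int(y_true.sum())}
    print(threshold, results[threshold])
```

In this toy case, the 0.5 cutoff catches one of three defaults with perfect precision. The 0.3 cutoff catches all three at the cost of one false alarm.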

Random Forest Classification

Load Data and Import Libraries

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

# plot_confusion_matrix was removed in scikit-learn 1.2; ConfusionMatrixDisplay replaces it
from sklearn.metrics import confusion_matrix, f1_score, accuracy_score, recall_score, roc_curve, auc, precision_score

Building The Forest

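This function encodes categorical variables into dummy/indicator variables and splits the data into training and test sets, preparing it for the Random Forest model. Unlike in the logistic regression section, it is applied to the full loan_df rather than the five-feature subset, and it uses test_size=0.2 rather than 0.3. As a result, the two models are not trained on the same features or evaluated on the same test set.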

def encode_and_split(loan_df):
    loan_data_dumm = pd.get_dummies(loan_df, prefix_sep='_', drop_first=True)
    x = loan_data_dumm.drop(['LOAN_DEFAULT'], axis=1)
    y = loan_data_dumm['LOAN_DEFAULT']
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=42)
    return x_train, x_test, y_train, y_test

x_train, x_test, y_train, y_test = encode_and_split(loan_df)

A RandomForestClassifier is instantiated with default settings and trained on the training data. The model is evaluated on the test data with the eval_model function, which was defined previously and is not shown in this section. It reports accuracy, precision, recall, F1, and AUC. This initial model provides a baseline for further tuning.
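Because eval_model is not shown here, the following is a hypothetical reconstruction consistent with the five metrics it prints. The author's actual implementation may differ. It is exercised on synthetic, imbalanced data from make_classification, which is an assumption standing in for the loan data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, auc, f1_score, precision_score, recall_score, roc_curve
from sklearn.model_selection import train_test_split


def eval_model(model, x, y):
    """Hypothetical sketch: print and return the five metrics reported in this section."""
    preds = model.predict(x)
    probs = model.predict_proba(x)[:, 1]  # predicted probability of the positive class (default = 1)
    fpr, tpr, _ = roc_curve(y, probs, pos_label=1)
    metrics = {
        "Accuracy": accuracy_score(y, preds),
        "Precision": precision_score(y, preds, zero_division=0),
        "Recall": recall_score(y, preds),
        "F1": f1_score(y, preds),
        "AUC": auc(fpr, tpr),
    }
    for name, value in metrics.items():
        print(f"{name}: {value}")
    return metrics


# Usage on synthetic, imbalanced data (roughly 80% class 0, 20% class 1)
x_demo, y_demo = make_classification(n_samples=1000, weights=[0.8, 0.2], random_state=0)
x_tr, x_te, y_tr, y_te = train_test_split(x_demo, y_demo, test_size=0.2, random_state=42)
demo_model = RandomForestClassifier(random_state=0).fit(x_tr, y_tr)
test_metrics = eval_model(demo_model, x_te, y_te)
```

Returning the dictionary as well as printing it makes it easy to compare train and test results programmatically.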

rfc_model = RandomForestClassifier()

rfc_model.fit(x_train, y_train)

eval_model(rfc_model, x_test, y_test)

Accuracy: 0.7762432716433274

Precision: 0.37455197132616486

Recall: 0.041166042938743354

F1: 0.07417923691215617

AUC: 0.6209486647898321

The Random Forest model achieved an accuracy of approximately 78%, similar to the simple logistic regression model built previously. Precision fell from 75% for logistic regression to about 37%. Only about 37% of the loans the Random Forest flagged as defaults were actual defaults, so most of its default predictions were incorrect. Recall, however, rose sharply from 0.03% to about 4.1%: the Random Forest identified more of the actual defaults but still missed the vast majority of them. The F1 score improved from 0.0006 to about 0.07, showing a better balance between precision and recall, though it remains poor in absolute terms. The AUC increased slightly, from about 0.61 to 0.62, a marginal improvement in the model's ability to distinguish between classes. Despite these improvements, the probability distribution plots revealed poor class separation, with the majority of cases unlikely to be classified as defaults. Overall, the Random Forest outperformed logistic regression on recall, F1 score, and AUC, but it still struggled to identify loan defaults effectively.

Overfitting

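Overfitting occurs when a model performs exceptionally well on training data but poorly on unseen test data. Evaluating the model on the training data and comparing the results with the test-set metrics reveals signs of overfitting. Here, the Random Forest scores almost perfectly on the training data (accuracy and AUC of about 1.0), compared with a test AUC of about 0.62, which indicates strong overfitting. This is expected with scikit-learn's defaults. With max_depth=None, each tree keeps splitting until its leaves are pure or too small to split further, so the forest can effectively memorize the training set.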

eval_model(rfc_model, x_train, y_train)

Accuracy: 0.9996354336998654

Precision: 0.9995794473443337

Recall: 0.9987394023283981

F1: 0.9991592482690406

AUC: 0.9999966391636474
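The train-versus-test comparison can be sketched on synthetic data. The sketch below fits a default forest (fully grown trees) and a depth-limited forest, then reports training and test AUC for each. The noisy make_classification data, the max_depth=3 setting, and the auc_gap helper are all illustrative assumptions. The point is the pattern: the unlimited forest scores near-perfectly on its own training data, leaving a large train/test gap.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic, noisy, imbalanced data standing in for the loan features
x_demo, y_demo = make_classification(n_samples=2000, n_informative=5, flip_y=0.1,
                                     weights=[0.8, 0.2], random_state=0)
x_tr, x_te, y_tr, y_te = train_test_split(x_demo, y_demo, test_size=0.2, random_state=42)


def auc_gap(model):
    """Fit the model, then return (train AUC, test AUC); a large gap signals overfitting."""
    model.fit(x_tr, y_tr)
    train_auc = roc_auc_score(y_tr, model.predict_proba(x_tr)[:, 1])
    test_auc = roc_auc_score(y_te, model.predict_proba(x_te)[:, 1])
    return train_auc, test_auc


gaps = {
    "max_depth=None": auc_gap(RandomForestClassifier(random_state=0)),
    "max_depth=3": auc_gap(RandomForestClassifier(max_depth=3, random_state=0)),
}
for name, (train_auc, test_auc) in gaps.items():
    print(f"{name}: train AUC {train_auc:.3f}, test AUC {test_auc:.3f}")
```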

Hyperparameters

The n_estimators parameter controls the number of trees in the forest. With a single tree, performance is poor. Increasing the number of trees to 10, 100, and 300 shows that more trees generally improve performance up to a point, beyond which the gains no longer justify the extra computational cost. We chose 100 trees, which is also scikit-learn's default. The max_depth parameter limits the depth of each tree. A shallow depth (max_depth=5) can lead to underfitting, where the model lacks the complexity to capture patterns in the data. Increasing the depth to 15 improves the model's ability to separate classes while striking a balance between underfitting and overfitting.
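The sweep described above can be sketched as a simple loop that scores each setting by test AUC. The tree counts (1, 10, 100, 300) and depths (5, 15) come from the text. The synthetic make_classification data and the score_forest helper are assumptions for illustration, so the scores it prints say nothing about the loan data itself.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the encoded loan data
x_demo, y_demo = make_classification(n_samples=2000, n_informative=5, flip_y=0.1,
                                     weights=[0.8, 0.2], random_state=0)
x_tr, x_te, y_tr, y_te = train_test_split(x_demo, y_demo, test_size=0.2, random_state=42)


def score_forest(**params):
    """Fit a forest with the given hyperparameters and return its test-set AUC."""
    model = RandomForestClassifier(random_state=0, **params).fit(x_tr, y_tr)
    return roc_auc_score(y_te, model.predict_proba(x_te)[:, 1])


# Vary the number of trees first, then the depth with 100 trees fixed
tree_scores = {n: score_forest(n_estimators=n) for n in (1, 10, 100, 300)}
depth_scores = {d: score_forest(n_estimators=100, max_depth=d) for d in (5, 15)}

print(tree_scores)
print(depth_scores)
```

Scoring by AUC rather than accuracy keeps the comparison focused on class separation, which is the weak point of both models in this analysis.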

rfc_model = RandomForestClassifier(n_estimators=100, max_depth=15)

rfc_model.fit(x_train, y_train)

eval_model(rfc_model, x_test, y_test)

Accuracy: 0.7829340996332912

Precision: 0.686046511627907

Recall: 0.005810518022454205

F1: 0.011523437499999997

AUC: 0.6482218803963928

With the complexity of the trees limited, the AUC has increased to ~0.65, the best class separation of any model so far! Precision is also high at about 69%. However, the model still identifies very few loan defaults, hence the poor recall of about 0.6%, down from about 4.1% for the untuned forest. Let's have a look at the training set performance!

eval_model(rfc_model, x_train, y_train)

Accuracy: 0.7922025701924159

Precision: 0.9845890410958904

Recall: 0.04263786242183058

F1: 0.08173612262787557

AUC: 0.8233634734529571

The model still performs better on the training data (AUC of about 0.82 versus about 0.65 on the test set), so it may be somewhat overfitted. However, the gap is much less dramatic than before: limiting the complexity of the trees in our forest has reduced overfitting.

Best Model for Predicting Loan Defaults

The Random Forest model is the better option for predicting loan defaults. Compared with the logistic regression model, the tuned forest achieves higher recall, F1 score, and AUC (about 0.65 versus 0.61), indicating a better balance and more effective identification of defaults, while its precision is slightly lower (about 69% versus 75%). However, despite these improvements, both models still struggle to predict loan defaults accurately, highlighting the need for further refinement or alternative modeling approaches.